Deep Dive Into Kubernetes Networking in Azure

by Roman Sokolkov on May 9, 2018

We started building our azure-operator in the fall of 2017. One of the challenges we faced was the networking architecture. We evaluated several possible architectures and chose the one that came out best on most criteria. We hope this post will help people set up their own Azure clusters with decent networking. First, let’s look at the available options for Kubernetes networking in Azure.

Calico with BGP

The first option was to use default Calico with BGP. We use this option in our on-prem and AWS clusters. On Azure we ran into known limitations: neither IPIP tunnel traffic nor traffic from IP addresses unknown to Azure is allowed.

Azure Container Networking

The second option was to use the Azure Container Networking (ACN) CNI plugin. ACN uses Azure network resources (network interfaces, routing tables, etc.) to provide network connectivity for containers. Native Azure networking support looked really promising, but after spending some time on it we were not able to run it successfully (issue). We did our evaluation in November 2017. Since then (as of May 2018) many things have changed, and ACN is now used by the official Azure installer.

Calico Policy-Only + Flannel

The third and most trivial option was to use overlay networking. Canal (Calico policy-only + Flannel) gives us the benefits of network policies, and overlay networking is universal, meaning it can run anywhere. This is a very good option. The only drawback is the VXLAN overlay itself, which, like any other overlay, carries a performance penalty.

Production ready Kubernetes networking on Azure. Our Best Option

Finally, the best option was to combine Calico in policy-only mode with the Kubernetes native (Azure cloud provider) support for Azure user-defined routes.

This approach has multiple benefits:

- Native Kubernetes network policies backed by Calico (both ingress and egress).

- Network performance (no overlay networking).

- Easy configuration and no operational overhead.

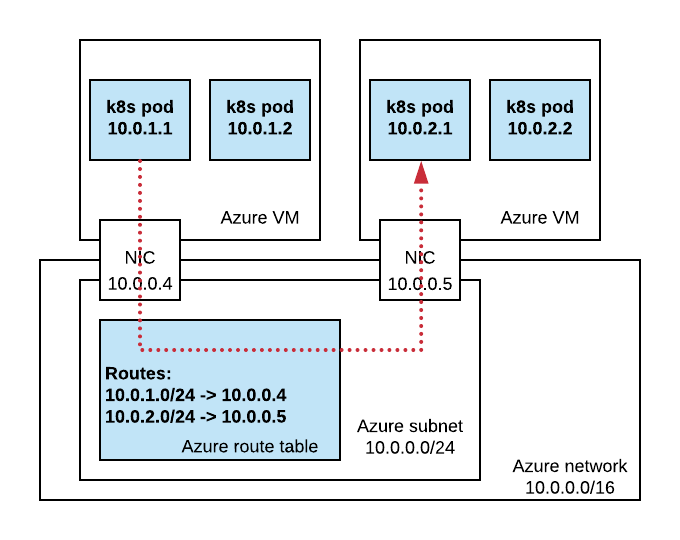

The only difference from default Calico is that BGP networking is disabled. Instead, we enable node CIDR allocation in Kubernetes. This is briefly described in the official Calico guide for Azure. Responsibility for routing pod traffic lies with the Azure route table instead of BGP (which Calico uses by default). This is shown in the diagram below.

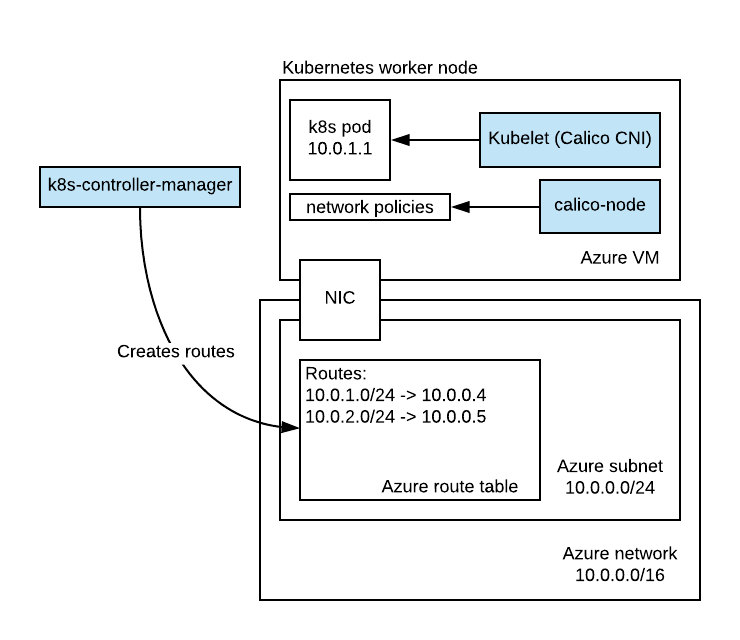

To better understand the services configuration, let’s look at the next diagram below.

- k8s-controller-manager allocates an IP block to each node and creates the matching routes in the Azure route table.

- calico-node is responsible for applying network policies. Under the hood it does this by creating iptables rules locally on every node.

- kubelet is responsible for calling the Calico CNI plugin. The plugin sets up the container virtual network interfaces (a veth pair in this case) and the local IP configuration.
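The division of labor above can be illustrated with a small simulation. This is only a sketch under stated assumptions, not the controller manager's actual code: the function name `allocate_node_routes`, the node names, and the node IPs are hypothetical, and blocks are handed out in simple sequential order, which the real allocator does not guarantee. It shows the idea that each node gets one pod block and the route table gets one entry pointing that block at the node's IP.

```python
import ipaddress


def allocate_node_routes(cluster_cidr, node_ips, node_mask_size=24):
    """Pair each node with one pod block and a route via the node's IP.

    Hypothetical helper for illustration only. Blocks are assigned in
    sequential order, which is an assumption of this sketch.
    """
    blocks = ipaddress.ip_network(cluster_cidr).subnets(new_prefix=node_mask_size)
    routes = []
    for (name, node_ip), block in zip(node_ips.items(), blocks):
        routes.append(
            {
                "node": name,
                "address_prefix": str(block),  # pod block owned by this node
                "next_hop_ip": node_ip,        # traffic for the block goes to the node
            }
        )
    return routes


# Hypothetical nodes living in the VMs subnet.
nodes = {"worker-0": "10.0.0.4", "worker-1": "10.0.0.5"}
for route in allocate_node_routes("10.0.128.0/17", nodes):
    print(route)
```

With routes like these in place, a packet addressed to a pod on another node is delivered by the Azure network to the node that owns the pod's block, so no tunnel is needed.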

The following checklist can help with configuring your cluster to use Azure route tables with Calico network policies.

- Azure route table created and mapped to subnet(s) with VMs. See how to create an Azure route table here.

- Kubernetes controller manager has --allocate-node-cidrs set to true and a proper subnet in the --cluster-cidr parameter. The subnet should be part of the Azure virtual network and will be used by pods. For example, for virtual network 10.0.0.0/16 and VMs subnet 10.0.0.0/24 we can pick the second half of the virtual network, which is 10.0.128.0/17. By default Kubernetes allocates a /24 subnet per node, which gives each node 256 pod addresses. This is controlled by the --node-cidr-mask-size flag.

- Azure cloud provider config has the routeTableName parameter set to the name of the route table. In general, having a properly configured cloud provider config in Azure is very important, but this is out of scope of this post. All available options for the Azure cloud provider can be found here.

- Calico is installed in policy-only mode with the Kubernetes datastore backend. This means Calico doesn’t need etcd access; all data is stored in Kubernetes. See the official manual for details.
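The address plan from the checklist can be sanity-checked before touching the cluster. The following is a minimal sketch using Python's standard `ipaddress` module. The helper names `check_pod_cidr` and `controller_manager_flags` are hypothetical, and the flags it renders are only the three mentioned above, so a real controller manager invocation needs more than this.

```python
import ipaddress


def check_pod_cidr(vnet, vm_subnet, cluster_cidr, node_mask_size=24):
    """Validate the pod CIDR and return (max_nodes, addresses_per_node)."""
    vnet_net = ipaddress.ip_network(vnet)
    vm_net = ipaddress.ip_network(vm_subnet)
    pod_net = ipaddress.ip_network(cluster_cidr)

    if not pod_net.subnet_of(vnet_net):
        raise ValueError(f"{pod_net} is not part of virtual network {vnet_net}")
    if pod_net.overlaps(vm_net):
        raise ValueError(f"{pod_net} overlaps the VMs subnet {vm_net}")
    if node_mask_size < pod_net.prefixlen:
        raise ValueError("node mask size must not be shorter than the cluster CIDR prefix")

    max_nodes = 2 ** (node_mask_size - pod_net.prefixlen)
    addresses_per_node = 2 ** (32 - node_mask_size)
    return max_nodes, addresses_per_node


def controller_manager_flags(cluster_cidr, node_mask_size=24):
    """Render only the flags named in the checklist (not a complete flag set)."""
    return [
        "--allocate-node-cidrs=true",
        f"--cluster-cidr={cluster_cidr}",
        f"--node-cidr-mask-size={node_mask_size}",
    ]


max_nodes, per_node = check_pod_cidr("10.0.0.0/16", "10.0.0.0/24", "10.0.128.0/17")
print(f"room for {max_nodes} nodes with {per_node} addresses each")
print(" ".join(controller_manager_flags("10.0.128.0/17")))
```

For the example values from the checklist, a /17 pod range split into /24 blocks leaves room for 128 nodes, which is worth knowing before the cluster grows.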

Conclusion

This post has shown some of the Kubernetes networking options in Azure and why we chose the solution we’re using. If you’re running Kubernetes on Azure or thinking about it you can read more about our production ready Kubernetes on Azure. If you’re interested in learning more about how Giant Swarm can run your Kubernetes on Azure, get in touch.
